GenAI Story Weaver: Automated Daily Bedtime Tales

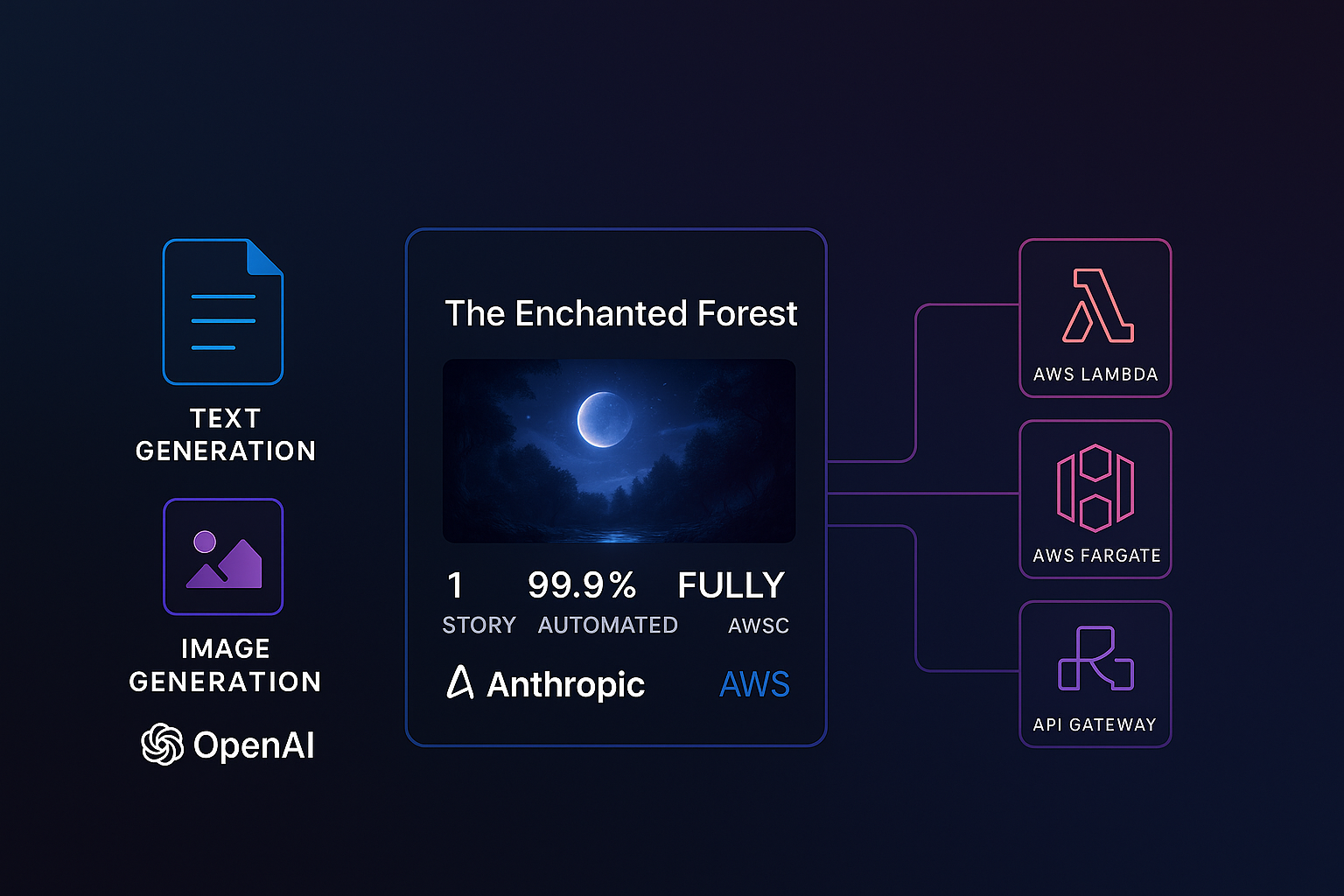

A cloud-native application leveraging Generative AI (OpenAI & Anthropic LLMs) to automatically create and publish unique daily bedtime stories with images, deployed on a robust AWS serverless and containerized infrastructure managed with Terraform.

Project Overview

GenAI Story Weaver is a personal initiative showcasing an advanced application of Generative AI and modern cloud-native architectures. The core of the project is an automated pipeline that crafts unique, engaging bedtime stories each day, complete with custom-generated imagery, and publishes them to a dynamic web interface.

The backend uses generative models from OpenAI and Anthropic to write each story and create its accompanying imagery, so that the content is fresh and varied every day.

This AI-driven content creation is orchestrated by a serverless architecture on AWS, with Lambda functions handling event-driven processing and AWS Fargate running the containerized application components. The entire system is deployed and managed with Terraform and is designed for high availability, scalability, resilience, and cost-efficiency, with Application Load Balancers distributing incoming traffic. Continuous integration and deployment run on GitHub Actions, which automates builds, tests, and deployments.
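The daily generation step itself is not published, so the following is only a minimal Python sketch of how such a pipeline could be structured. It assumes the text providers are injected as plain callables (for example thin wrappers around the OpenAI and Anthropic SDKs) that are tried in order, and that each story and its image are stored under date-based keys. The names `generate_with_fallback` and `build_daily_story`, the prompt wording, and the `stories/<date>/` key layout are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Callable, Sequence, Tuple

# Hypothetical provider signatures: a text provider maps a prompt to text,
# an image provider maps a prompt to raw image bytes.
TextProvider = Callable[[str], str]
ImageProvider = Callable[[str], bytes]


@dataclass
class StoryRecord:
    story_date: str
    title: str
    body: str
    provider: str        # which text provider produced the story
    text_key: str        # hypothetical S3 key for the story text
    image_key: str       # hypothetical S3 key for the illustration
    image_bytes: bytes = field(repr=False)


def generate_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, TextProvider]]
) -> Tuple[str, str]:
    """Try each (name, provider) in order; return (name, text) of the first success."""
    errors = []
    for name, provider in providers:
        try:
            return name, provider(prompt)
        except Exception as exc:  # a real client would catch narrower SDK errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all text providers failed: " + "; ".join(errors))


def build_daily_story(
    day: date,
    providers: Sequence[Tuple[str, TextProvider]],
    image_provider: ImageProvider,
) -> StoryRecord:
    # Hypothetical prompt; the first line of the reply is treated as the title.
    prompt = (
        "Write a short, gentle bedtime story for children. "
        f"Date: {day.isoformat()}. Put the title on the first line."
    )
    provider_name, text = generate_with_fallback(prompt, providers)
    title, _, body = text.strip().partition("\n")
    image_bytes = image_provider(f"Soft storybook illustration for: {title}")
    prefix = f"stories/{day.isoformat()}"
    return StoryRecord(
        story_date=day.isoformat(),
        title=title.strip(),
        body=body.strip(),
        provider=provider_name,
        text_key=f"{prefix}/story.txt",
        image_key=f"{prefix}/cover.png",
        image_bytes=image_bytes,
    )
```

Trying providers in sequence is one way to use two LLM vendors: a single outage or rate limit does not skip a day's story. Whether the project actually falls back between providers, or assigns them different tasks, is not stated in the description.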

The frontend, built with Next.js, provides a responsive and engaging user experience, consuming the AI-generated content through a serverless API layer built with Amazon API Gateway and Lambda. This project demonstrates an end-to-end application of AI in content creation, coupled with sophisticated cloud deployment and operational practices.
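As an illustration of the serverless API layer, here is a hedged sketch of a Python Lambda handler behind an API Gateway proxy integration. It returns the `statusCode`/`headers`/`body` dictionary that proxy integrations expect. The route shape (a `date` path parameter, as in a hypothetical `GET /stories/{date}`), the in-memory `_STORIES` dictionary standing in for a DynamoDB lookup, and the field names are assumptions for the example.

```python
import json
import re
from typing import Any, Dict, Optional

# Hypothetical in-memory stand-in for the DynamoDB table; a deployed function
# would query the table (e.g. with boto3) instead of reading this dict.
_STORIES: Dict[str, Dict[str, str]] = {
    "2023-05-01": {
        "date": "2023-05-01",
        "title": "The Sleepy Lantern",
        "imageKey": "stories/2023-05-01/cover.png",
    },
}

_DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


def get_story(story_date: str) -> Optional[Dict[str, str]]:
    return _STORIES.get(story_date)


def _response(status: int, payload: Any) -> Dict[str, Any]:
    # Lambda proxy integrations return statusCode, headers and a *string* body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # pathParameters can be null in the proxy event, so default to an empty dict.
    params = event.get("pathParameters") or {}
    story_date = params.get("date", "")
    if not _DATE_RE.fullmatch(story_date):
        return _response(400, {"error": "date must be YYYY-MM-DD"})
    story = get_story(story_date)
    if story is None:
        return _response(404, {"error": f"no story for {story_date}"})
    return _response(200, story)
```

Validating the path parameter before touching storage keeps malformed requests cheap, and a JSON body lets the Next.js frontend consume the same response whether it fetches at build time (SSG) or per request (SSR).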

Key Features

- Automated Daily Content: Generative AI pipeline using OpenAI and Anthropic LLMs for daily creation of unique bedtime stories and accompanying images.

- Serverless Backend: Core logic and AI orchestration run on AWS Lambda, ensuring scalability and pay-per-use efficiency.

- Containerized Components: Utilizes Docker and AWS Fargate for deploying specific application services, offering portability and consistent environments.

- Cloud-Native Architecture on AWS: Designed with API Gateway, S3 (for story/image storage), DynamoDB (for metadata/content management), Application Load Balancer, and other core AWS services.

- Infrastructure as Code (IaC): Entire AWS infrastructure provisioned and managed using Terraform.

- CI/CD Automation: Automated build, test, and deployment pipelines via GitHub Actions.

- Dynamic Frontend: Next.js (React) application that renders stories with server-side rendering (SSR) or static site generation (SSG) for fast page loads and good SEO.

- API-Driven: Decoupled architecture with a serverless API layer for content delivery.
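The description does not say how the daily run is triggered. One common option, assumed here, is an Amazon EventBridge schedule that invokes a Lambda function once a day; scheduled events carry the trigger time in a `time` field. The sketch below derives the story date from that field and skips dates that already have a story, because asynchronous invocations can be retried. `make_scheduled_handler` and its `exists`/`generate`/`save` callables are hypothetical placeholders for the DynamoDB, LLM, and S3 calls.

```python
from datetime import datetime, timezone
from typing import Any, Callable, Dict


def story_date_from_event(event: Dict[str, Any]) -> str:
    """Return the UTC calendar date (YYYY-MM-DD) a scheduled run belongs to."""
    raw = event.get("time")
    if raw:
        # EventBridge timestamps look like "2023-05-01T06:00:00Z"; replace the
        # trailing "Z" so datetime.fromisoformat accepts it on older Pythons.
        when = datetime.fromisoformat(raw.replace("Z", "+00:00"))
    else:
        # Manual test invocations may omit "time"; fall back to the current UTC time.
        when = datetime.now(timezone.utc)
    return when.astimezone(timezone.utc).date().isoformat()


def make_scheduled_handler(
    exists: Callable[[str], bool],               # hypothetical: story already stored for date?
    generate: Callable[[str], Dict[str, Any]],   # hypothetical: runs the LLM/image pipeline
    save: Callable[[Dict[str, Any]], None],      # hypothetical: persists to DynamoDB/S3
) -> Callable[[Dict[str, Any], Any], Dict[str, str]]:
    def handler(event: Dict[str, Any], context: Any) -> Dict[str, str]:
        story_date = story_date_from_event(event)
        if exists(story_date):
            # EventBridge invokes Lambda asynchronously and failed async
            # invocations can be retried, so a date that is done is skipped.
            return {"status": "skipped", "date": story_date}
        item = dict(generate(story_date))
        item["storyDate"] = story_date
        save(item)
        return {"status": "created", "date": story_date}

    return handler
```

Injecting the storage and generation steps as callables keeps the scheduling logic testable without AWS credentials; in a deployment they would be bound once at module load so Lambda can reuse the clients across invocations.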


Project Details

Date: 2023

Role: Sole Developer, Architect & AI Engineer

© 2025 Xabier Muruaga. All rights reserved.