Cloud-Native RAG System with LLM Integration

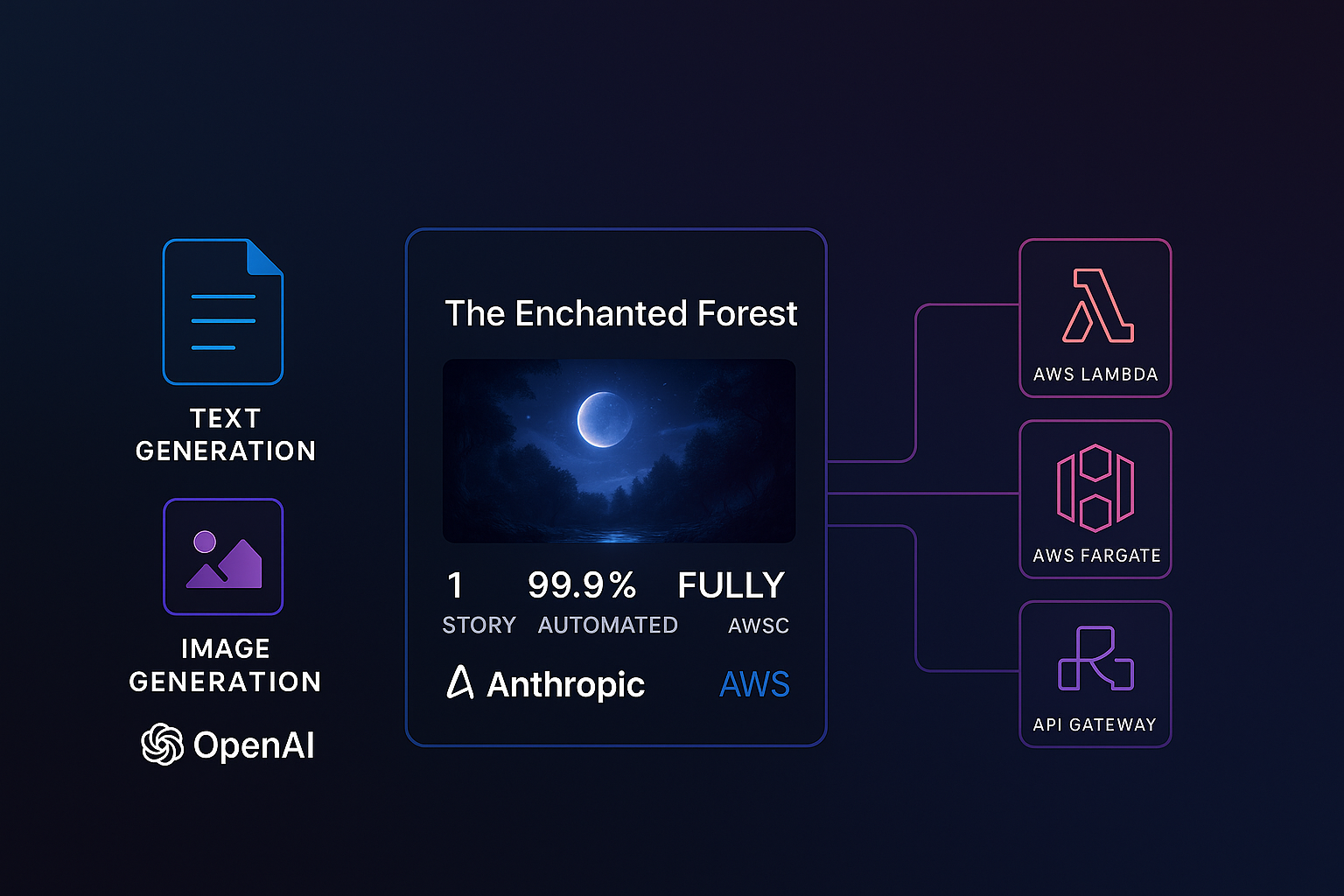

A full-stack, cloud-native Retrieval-Augmented Generation (RAG) system built with LangChain, LLMs from OpenAI and Anthropic, and pgvector, deployed on AWS (Fargate, Lambda) with infrastructure managed by Terraform.

Project Overview

This personal project demonstrates the end-to-end development and deployment of a Retrieval-Augmented Generation (RAG) system. It focuses on a scalable, cloud-native solution for question answering over custom datasets, integrating Large Language Models with external knowledge bases. The entire infrastructure is defined and managed with Terraform on AWS.

The core RAG pipeline, orchestrated with LangChain, ingests documents, creates embeddings, and stores them in PostgreSQL with the pgvector extension. At query time, semantic retrieval over those embeddings supplies contextually relevant passages to LLMs from OpenAI and Anthropic, which generate the answers. The backend combines Python for AI logic with TypeScript/Node.js for the API layers, deployed as containerized applications on AWS Fargate and as serverless functions on AWS Lambda. The architecture also uses API Gateway, Application Load Balancers, S3, IAM, VPC, and CloudWatch.
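The retrieval step described above can be illustrated with a minimal sketch. This is not the project's code: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory `store` list stands in for a pgvector table, and all function names are hypothetical. The ranking uses cosine distance, which is the metric pgvector exposes through its `<=>` operator.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_distance(a: Counter, b: Counter) -> float:
    """Return 1 - cosine similarity; 1.0 if either vector is empty."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def retrieve(query: str, store: List[Tuple[str, Counter]], k: int = 2) -> List[str]:
    """Return the k stored texts closest to the query by cosine distance."""
    query_vec = embed(query)
    ranked = sorted(store, key=lambda row: cosine_distance(query_vec, row[1]))
    return [text for text, _ in ranked[:k]]


def build_prompt(query: str, context: List[str]) -> str:
    """Assemble the retrieved passages and the question into one prompt."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


# Ingest: embed each document and keep (text, vector) pairs in memory.
documents = [
    "Terraform provisions the AWS infrastructure.",
    "pgvector stores document embeddings in PostgreSQL.",
    "The Next.js frontend submits user queries.",
]
store = [(doc, embed(doc)) for doc in documents]

# Query: retrieve the closest passage and build the prompt for the LLM.
question = "Where are embeddings stored?"
prompt = build_prompt(question, retrieve(question, store, k=1))
```

In a deployment like the one described, the `sorted` call would be replaced by a SQL query ordered by vector distance, and `prompt` would be sent to the chosen LLM.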

A dynamic Next.js frontend allows users to interact with the RAG system, submitting queries and receiving AI-generated responses. The project features a robust CI/CD pipeline using GitHub Actions for automated builds, tests, and deployments. Documentation is maintained with Docusaurus 2.

Key Features

- End-to-End RAG Orchestration: Utilized LangChain for building and managing the complete Retrieval Augmented Generation pipeline.

- Efficient Vector Data Store: Implemented PostgreSQL with pgvector for high-performance storage and semantic search of document embeddings.

- Multi-LLM Support: Designed to leverage diverse Large Language Models from OpenAI and Anthropic for embedding and generation tasks.

- Hybrid Backend Architecture: Combined Python (for LangChain, AI processing) with TypeScript/Node.js (for scalable API services).

- Comprehensive Cloud-Native AWS Deployment: Leveraged AWS Fargate, Lambda, API Gateway, ALB, S3, IAM, VPC, and CloudWatch for a resilient and scalable solution.

- Full Infrastructure as Code (IaC): Entire AWS infrastructure provisioned and managed using Terraform.

- Automated CI/CD Pipeline: Implemented automated workflows for build, test, and deployment via GitHub Actions.

- Interactive RAG Interface: Developed a user-friendly frontend with Next.js (React) for querying the system.
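As an illustration of the multi-LLM support listed above, one common way to keep the pipeline independent of a single vendor is to route generation calls through a small provider registry. The sketch below is an assumption about how that could look, not the project's implementation: `LLMRouter`, `fake_openai`, and `fake_anthropic` are hypothetical names, and the two provider functions are stubs that make no network calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical provider signature: takes a prompt, returns generated text.
GenerateFn = Callable[[str], str]


@dataclass
class LLMRouter:
    """Dispatch a prompt to a named provider, falling back to a default."""

    providers: Dict[str, GenerateFn]
    default: str

    def generate(self, prompt: str, provider: Optional[str] = None) -> str:
        name = provider or self.default
        if name not in self.providers:
            raise ValueError(f"unknown provider: {name}")
        return self.providers[name](prompt)


def fake_openai(prompt: str) -> str:
    """Stub standing in for a call to an OpenAI model."""
    return f"[openai-stub] {prompt}"


def fake_anthropic(prompt: str) -> str:
    """Stub standing in for a call to an Anthropic model."""
    return f"[anthropic-stub] {prompt}"


router = LLMRouter(
    providers={"openai": fake_openai, "anthropic": fake_anthropic},
    default="openai",
)
```

Calling `router.generate(prompt)` uses the default provider, while `router.generate(prompt, provider="anthropic")` selects one explicitly; swapping a stub for a real client would not change the calling code.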

Project Details

Date

2023

Role

Sole Architect, Lead Developer & AI Engineer

© 2025 Xabier Muruaga. All rights reserved.